Recent use cases highlight the transformative impact that artificial intelligence (AI) could have on the insurance industry. However, despite examples of how AI is delivering benefits today and the opportunities for the insurance value chain in the near future, challenges remain in truly integrating AI into the insurance model. Human involvement and ethical development are key considerations for effective adoption and long-term success. In this article, Swiss Re Institute explores the implications of AI in the insurance industry, looking at the key principles for ethical AI applications, examples of its use in insurance, and discussions on the future landscape.

Amidst the recent excitement around large language model (LLM) chatbots such as ChatGPT, the potential benefits of artificial intelligence (AI), as well as awareness of its possible flaws, have begun to emerge. Most industries, including re/insurance, are now racing to better understand the potential of commercialising AI.

In four parts, Swiss Re explores what is important for a successful adoption of AI and where it can play a useful role in the value chain: the vital elements that AI applications must fulfil to benefit the insurance industry; the countless possibilities AI opens up; what it will take to foster trust in rapid machine advancements; and finally, what these impending business changes mean for future generations working in the insurance industry.

Part 1 - How is AI used in business?

Part 2 - Benefits and use cases of AI in insurance

Part 3 - Trustworthy AI – the way forward for insurance

Part 4 - The future of AI in insurance

How is AI used in business?

AI, with its ability to analyse vast amounts of data quickly and its decision-making capabilities, is becoming an increasingly important source of insights.

It has the potential to transform business efficiency and create new solutions. However, the decisions AI takes must be ethical, unbiased and sustainable, and must comply with laws and regulations. To create real benefit for society and the insurance industry, there are many governance, organisational and cultural conditions that applications of AI need to fulfil.

10 guiding principles for AI are:

- Human dignity - AI applications should protect human dignity, rights and fundamental freedoms. AI policies that ensure compliance with requirements, including those driving fairness and transparency, are needed.

- Laws and regulations - AI governance must be consistent with laws and regulations. There should be responsibilities and frameworks to assess and review this throughout the entire life cycle of AI, e.g. with model monitoring.

- Risk management - The risks of AI applications should be mitigated by setting up an adequate analytics and AI risk management framework and related processes.

- Foster explainability - There should be internal and external transparency to the extent permissible under applicable laws and regulations. Explainability should be fostered, where applicable, to help stakeholders make informed decisions while protecting privacy, confidentiality and security.

- Data protection - Solid cyber security, data foundations and standardised systems are paramount. Consent to use the data, data quality and quantity are among the key factors to succeed with AI.

- Impact on the value chain - Clarity is needed on where and how AI, in combination with human processes, has a positive impact on the value chain, whether by increasing efficiency or enabling new solutions, and on what it costs.

- Understanding of the limitations of AI - Human input might remain invaluable in some critical decisions in the customer journey to ensure digital trust.

- Upskilling existing workforce - Commitment to train and educate staff on the use of new analytics and AI technologies.

- Independent internal or external control - To ensure that the ongoing validation of the algorithms and adjustments is performed independently, an additional independent (internal or external) control function is recommended.

- Continuous dialogue with all stakeholders - The use of AI will likely have profound effects on the insurance industry and society. Therefore, it is necessary to ensure a continuous dialogue with all stakeholders to be able to respond to changing needs and views.

This article is a summary of a four-part series written by Pravina Ladva, Chief Digital & Technology Officer, Swiss Re; and Antonio Grasso, Entrepreneur, Technologist, Founder & CEO @dbi.srl, and author. It is reproduced with kind permission of ICMIF Supporting Member Swiss Re.

To access the full in-depth articles, please visit the links below on Swiss Re's website:

Part 1 - How is AI used in business?

Part 2 - Benefits and use cases of AI in insurance

Part 3 - Trustworthy AI – the way forward for insurance

Part 4 - The future of AI in insurance

Published January 2024

Benefits and use cases of AI in insurance

The vision of AI enabling more precise coverage and pricing adjustments in the re/insurance industry is an attractive long-term goal, but there are many benefits being delivered today and opportunities for the insurance value chain in the near future.

Current insurance AI applications are based on a narrow type of Artificial Intelligence (see below), which has three main functions.

First, it can automate repetitive knowledge tasks (e.g., classifying submissions and claims). Second, it can generate insights from large complex data sets to augment decision making (e.g., portfolio steering, risk assessment). Third, it can enhance parametric products and risk solutions.

Although AI enables re/insurers to become more efficient and offers new solutions, its use requires an entire system, including human interaction. The added value of AI comes only from the smart combination of AI models and human processes, not from a standalone AI model.

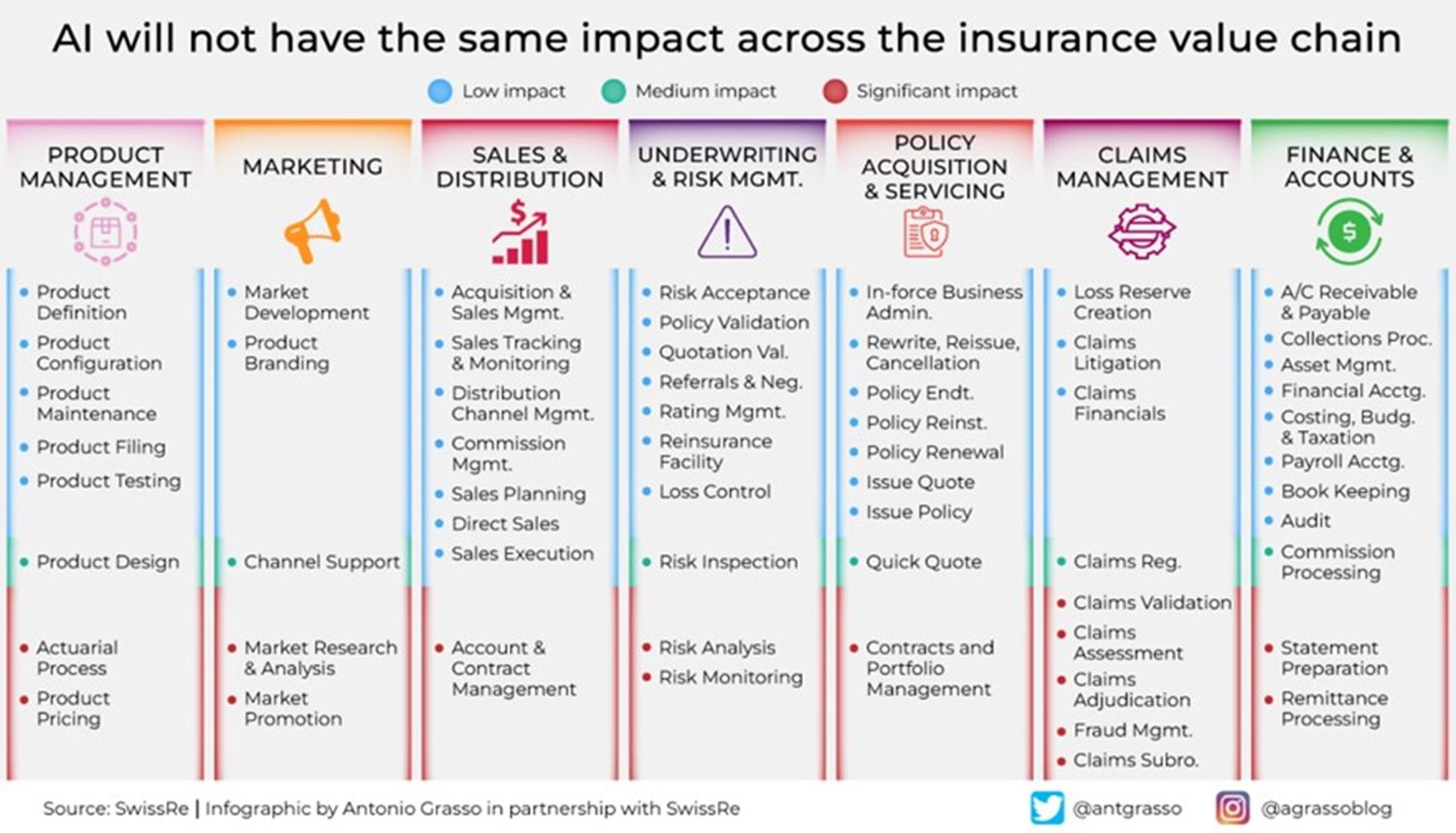

The AI technologies most leveraged in insurance today are Machine Learning, Natural Language Processing (NLP), and Computer Vision. Advanced analytics, and some forms of AI, have been increasingly enriching the insurance value chain for several years and will have a different impact on each stage of the value chain in the future.

AI use cases in insurance

1. Underwriting – improved risk assessment and customer understanding

Re/insurers have access to increasing amounts of data at the time of underwriting. The ability to convert this data into actionable insights is a key competitive differentiator, as it allows for more tailored coverage and pricing for customers. AI techniques such as supervised learning can complement and streamline certain underwriting processes.

2. Claims - improved back-end processes, new products, and coverage for more risks

Not only can AI improve efficiency and insights, it can also enable the development of new solutions and coverage for previously uninsurable risks.

3. Claims - computer vision can reduce car accident fraud and detect driving style

Leveraging the confluence of edge computing and AI, an Italian startup has been granted a patent to record the front visual panorama of a moving vehicle, identify the driver's driving style, and certify accidents by recording dynamics.

Many AI projects are also internally driven and address core processes – for example, using natural language understanding to help ingest and classify unstructured data into decision-making processes or to better understand the exposure in contracts and the overall portfolio.
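To make the idea of supervised learning mentioned under underwriting a little more concrete, the following is a minimal sketch rather than a description of any method used by Swiss Re or another insurer. It assumes a hypothetical property book in which each risk is described by two invented features (building age in years and number of prior claims) and carries a risk label assigned by a human underwriter. A simple nearest-centroid classifier learns from those labelled examples and suggests a label for a new submission; in line with the principles above, such a suggestion would support, not replace, the underwriter's decision.

```python
from statistics import mean

# Hypothetical labelled examples: (building_age_years, prior_claims) -> risk label.
# All values are invented purely for illustration.
TRAINING = [
    ((25.0, 3.0), "high"),
    ((30.0, 2.0), "high"),
    ((50.0, 0.0), "low"),
    ((60.0, 1.0), "low"),
]


def fit_centroids(rows):
    """Learn one centroid (the mean feature vector) per label."""
    grouped = {}
    for features, label in rows:
        grouped.setdefault(label, []).append(features)
    return {
        label: tuple(mean(column) for column in zip(*vectors))
        for label, vectors in grouped.items()
    }


def predict(centroids, features):
    """Return the label whose centroid is closest in squared Euclidean distance."""
    def squared_distance(centroid):
        return sum((a - b) ** 2 for a, b in zip(features, centroid))

    return min(centroids, key=lambda label: squared_distance(centroids[label]))


centroids = fit_centroids(TRAINING)

# Suggest a label for a new, unseen submission (again hypothetical).
suggestion = predict(centroids, (28.0, 2.0))
print(suggestion)
```

A real underwriting model would need far more data, feature scaling, validation, and the monitoring and independent control described in the guiding principles; the sketch only shows the basic pattern of learning from labelled examples.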

Trustworthy AI – the way forward for insurance

With the rise of AI-generated images and AI chatbots, consumer digital trust has become critically important. For re/insurers, digital trust is essential to business, and as they increasingly improve the re/insurance value chain with advanced analytics and some forms of AI, they need to bring customers along for the ride. But where it can be difficult to distinguish the real from the fake, how can trust be won?

Strong AI infrastructure does not correlate with digital trust

Trust in AI is relatively low in markets with advanced levels of digital infrastructure, such as North America, Germany, France and the UK. By contrast, trust in companies using AI and positive perceptions of AI were highest in emerging digital growth markets such as India, Mexico and Nigeria.

Swiss Re Institute analysis suggests there is a gap between what institutions think is important to build digital trust (such as digital infrastructure or AI capabilities) and what really matters to consumers (a sense of purpose, incentives, ease of use, and transparency of AI models).

Re/insurers can gain trust by explaining the benefits of data sharing to consumers, as well as by structuring functionality, navigation and search functions to facilitate ease of use and understanding. However, this will not be sufficient for all customers, as trust is also shaped by psychological and cultural factors. Understanding and acknowledging some of these barriers to trust can help re/insurers overcome them.

Informed decisions are based on transparency

Trust in AI solutions requires transparency with consumers. Fostering explainability helps stakeholders make informed decisions while protecting privacy, confidentiality and security.

AI will require entire value chains for its effective use, including human interaction. The added value of AI will only come from a smart combination of AI models and human processes, not just a standalone AI model.

The future of AI in insurance

What do these impending business changes mean for future generations working in the insurance industry?

Unchanging core purpose

Despite the transformative potential of AI in the re/insurance industry, one constant remains: people's need for risk transfer and mitigation solutions. AI may enable new solutions and business models, but it cannot alter the core mission of our industry. Hopefully, AI will help in closing the protection gap more efficiently.

Currently, the industry is in the early stages of adopting AI, primarily employing narrow AI for specific purposes like automating parts of the underwriting processes without decision making. Many large insurance companies are experimenting with LLMs such as GPT, though they are not yet fully deploying them. These models can have a significant impact on the re/insurance industry, not only by automating processes, but also by automating tasks such as code generation and analytics. More and more AI use cases are emerging in the industry.

Shaping the future of AI now

The future of ethical and beneficial AI depends on actions taken today. Full enterprise maturity requires addressing various risks associated with LLMs and Generative AI.

There are risks associated with Generative AI that are relevant for the insurance industry. For example, Generative AI has unresolved intellectual property issues regarding training, its outcome and the creation of "plausible" content. The same goes for data privacy. The collection of large data sets with the intent of producing something new from that material raises questions around rights to the underlying data, as well as concerns about data quality and biases.

In order to guarantee that AI fulfils its future potential, it is important to actively shape the technology now. As an industry, we have an obligation to drive the development of ethical AI that benefits society, ensuring it aligns with our values and needs.

As we navigate the immense promise of AI, it is crucial to recognise the pivotal role humans play in harnessing its power so that future generations can benefit from AI applications. With AI technology still in its infancy, we have an opportunity to forge a path in which AI enhances our work while preserving our core purpose in the ever-evolving world of insurance.

As we move further into the era of generative AI, it's important to remember that AI is, at its core, a reflection and amplification of our human thinking. While this sophisticated technology is capable of creating content that appears original, it is fundamentally an echo of our input. It is not an independent entity but a tool we have developed to transform our thoughts, knowledge, and creativity into new forms at a scale and speed far beyond our capabilities.

Navigating the ethical landscape of generative AI

Within this premise, essential questions arise around generative AI, encompassing data ownership, quality, potential bias, and misuse. Far from being purely technological, these challenges serve as a mirror that reflects our ongoing societal and ethical struggles. Therefore, our approach to addressing these concerns requires advanced technical solutions and deep introspection about our collective values and guiding principles.

The illusion of synthetic thought

As we navigate this ethical landscape, we encounter the notion of synthetic reasoning, which is often attributed to AI. It's important to understand that what may appear to be synthetic thinking is actually a reconfigured version of our human reasoning, shaped by the training data we provide. AI doesn't create knowledge; it reshapes and remixes the human knowledge it's trained on. It's our thoughts, amplified and modified by algorithmic patterns.

Harnessing the immense power of generative AI

Recognising this reshaping of our thinking underlines the transformative power of generative AI, which is as broad as it is deep. This technology has the potential to revolutionise efficiency, drive innovation and streamline processes across numerous sectors. To fully realise these benefits, however, we must thoughtfully guide the development and application of generative AI, maintaining a comprehensive understanding of its potential and limitations to ensure its responsible, strategic use.

Balancing risks and opportunities

While it's true that generative AI poses significant challenges, I firmly believe that the potential benefits far outweigh the risks. The gains in productivity, creative problem solving, and resource management that can be derived from AI can drive societal progress and economic growth on an unprecedented scale.

Moreover, by recognising AI as a digital extension of our thought processes, we allay fears of AI as an independent entity, allowing us to maintain control over this technology and align its development with our collective ethical and societal norms. Finally, generative AI has the potential to democratise access to information, services, and resources, enabling widespread access to services once limited by human capacity.

As creators and stewards of AI, we are responsible for ensuring that this digital manifestation of our knowledge benefits the collective. In doing so, we shift the narrative toward the limitless potential of generative AI and away from the predominantly fear-driven perspectives. This belief underpins my conviction that the benefits of integrating generative AI into our society far outweigh the risks.