In this excerpt from Gallagher Data and Analytics Leader Don Price’s "View from the Industry" on data science, published in full in Gallagher Re’s Global InsurTech report for Q3 2023, we explore potential use cases and the bear traps that cedants should be aware of.

Large language models (LLMs) have undergone many advancements and iterations to reach the sophistication they possess today and, as a result, now offer significant business potential — but the risks still need to be carefully considered and managed.

Early language models pioneered a way of taking the sequence of preceding words into account when predicting the next one. Enhancements such as learning word associations and identifying grammatical structure have allowed LLMs to interpret the meaning of sentences, while the explosion of publicly available data has provided the large training datasets needed to produce complex, sophisticated models.

As a form of generative artificial intelligence (Generative AI), LLMs operate as text completion tools, predicting the next word from the probabilities of likely candidate words. Current LLMs can keep a long document coherent because each new word is predicted from the full body of preceding text, so earlier content continues to shape the context for what follows. The model also incorporates a degree of randomness in word selection, rather than always choosing the single most likely word, which helps it sound human-like.
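The next-word selection step can be illustrated with a minimal sketch. It assumes a toy list of four candidate words with made-up scores. A real LLM scores tens of thousands of tokens with a neural network conditioned on all of the preceding text, and the function names here are hypothetical. The scores are turned into probabilities with a softmax, and the next word is then drawn at random in proportion to those probabilities. A "temperature" parameter (a common sampling control, assumed here rather than taken from the article) sets how much randomness is allowed.

```python
import math
import random

def softmax(scores, temperature=1.0):
    """Convert raw scores into probabilities; a lower temperature sharpens the distribution."""
    scaled = [s / temperature for s in scores]
    m = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample_next_word(candidates, scores, temperature=1.0, rng=random):
    """Draw the next word at random, weighted by its probability (hypothetical helper)."""
    probs = softmax(scores, temperature)
    return rng.choices(candidates, weights=probs, k=1)[0]

# Hypothetical continuations of "The claim was ..." with illustrative scores
candidates = ["approved", "denied", "submitted", "banana"]
scores = [2.0, 1.5, 1.0, -3.0]

# Show each candidate's probability as a percentage
for word, p in zip(candidates, softmax(scores)):
    print(f"{word}: {p:.1%}")

# Sample one word; a seeded generator makes the run repeatable
rng = random.Random(42)
print(sample_next_word(candidates, scores, temperature=0.7, rng=rng))
```

With a temperature close to zero the sketch almost always picks the highest-scoring word, which gives predictable but repetitive text. Higher temperatures let less likely words through more often, which is the source of the variety described above.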

Users interact with LLMs through prompts, that is, instructions or questions written in plain language, and the practice of crafting these prompts to obtain better responses is known as prompt engineering. However, for larger process deployments, data scientists are still needed to develop the necessary code, monitor performance, and retrain models.

Model explainability is technically challenging. Companies like Gallagher have made early progress in finding ways to "cite sources" and enhance the transparency of LLMs, so they are less of a black box.

LLMs can be used in various scenarios, including written communication. They can help generate automated responses in customer support chats or produce concise summaries. But they have limitations.

They are not yet reliable at reasoning, at understanding the context of technical or domain-specific content, or at accurately interpreting less straightforward features of human language, such as sarcasm.

This article was a collaboration between Gallagher Re, Gallagher, and CB Insights, led by Dr. Andrew Johnston, Global Head of InsurTech, Gallagher Re; Irina Heckmeier, Global InsurTech Report Data Director, Gallagher Re; and Freddie Scarratt, Deputy Global Head, InsurTech, UK, EMEA & APAC.

The full report, the Gallagher Re 2023 Global InsurTech Report, is available to download from Gallagher Re. The article is reproduced with the kind permission of ICMIF Supporting Member Gallagher Re.

Published February 2024

Understanding the potential

Companies are eager to explore the potential value of LLMs, but assessing these models requires partnerships between process owners, data science, and information technology (IT). Companies need first to identify their objectives, test solutions, and work through how best to deploy them for maximum value, recognizing that the value will not be realized immediately.

Successful adoption is a challenge, but early evidence from Gallagher’s research suggests there's value for those willing to make the investment.

Within (re)insurance, Gallagher sees numerous opportunities to improve the efficiency and quality of customer service, provide recommendations regarding risk mitigation strategies, and effectively manage the cost of claims. From insurance policies to claims documents, LLMs can help summarize, interpret, and explain content.

This information can be a powerful aid to our experienced brokers and service agents in providing the best solutions to Gallagher’s customers.

The opportunities from LLMs that Gallagher is evaluating are not too dissimilar to our established data science practices, but we expect LLM-based solutions to scale more quickly. For instance, our own LLM, Gallagher AI, was built to provide an internal, secure environment in which employees can use the technology without exposing sensitive, proprietary data.

Ideas for using LLMs are being sourced from employees at all levels and across all functions. Feasibility studies are being conducted for complex opportunities, and several smaller projects, such as language translation applications, have already been implemented for quick cost savings.

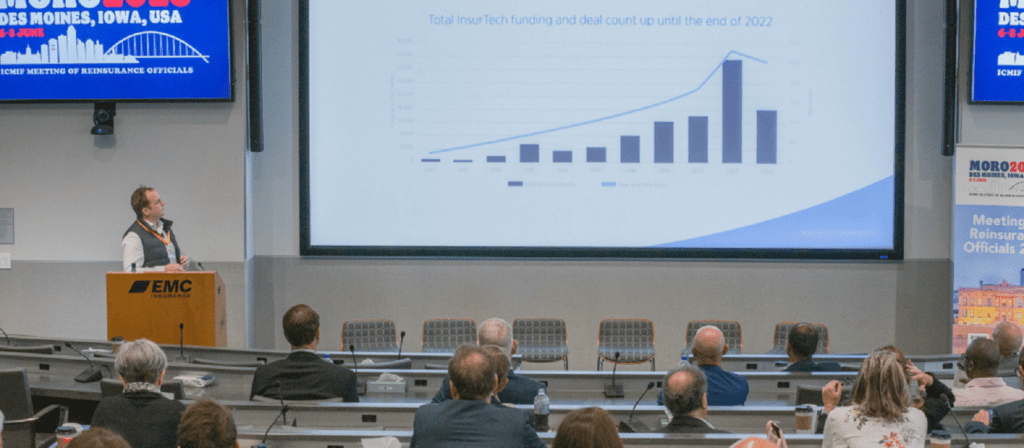

The energy and excitement around LLMs have been tremendous. Marketing efforts and news coverage have generated significant hype, and this heightened interest has driven increased venture capital funding into companies associated with generative AI solutions, totaling USD 15 billion so far in 2023. Firms such as Nvidia, Microsoft, Google, and Palantir have seen their share prices surge.

We're optimistic our businesses will find value, but it's still too early to draw firm conclusions.

The risks of generative AI

Companies should recognize the risks associated with LLMs before adopting them. Like any predictive model, LLMs can make mistakes; errors in which a model confidently produces plausible but false content are often described as ‘hallucinations’. Businesses need to test models thoroughly before using them and understand why they work, and they should monitor the models in use to ensure their recommendations remain valid over time.

The training of LLMs also poses legal and regulatory risks. Models have been trained on large amounts of text available on the internet, and in some jurisdictions, legal challenges to the use of this data are raising questions. While we don’t currently see this as an existential challenge to LLMs, companies may have to modify processes and solutions based on these models in the future.

Evolution, not revolution

While the exact business value generated from these generative AI models is yet to be determined, upfront investments will be needed to quantify potential, develop pilots, and implement solutions. LLMs are not a revolutionary solution that can solve all problems. However, at Gallagher, we see the potential to strengthen our sales and consulting experts by equipping them with better insights with which to meet customers’ needs.